Reports

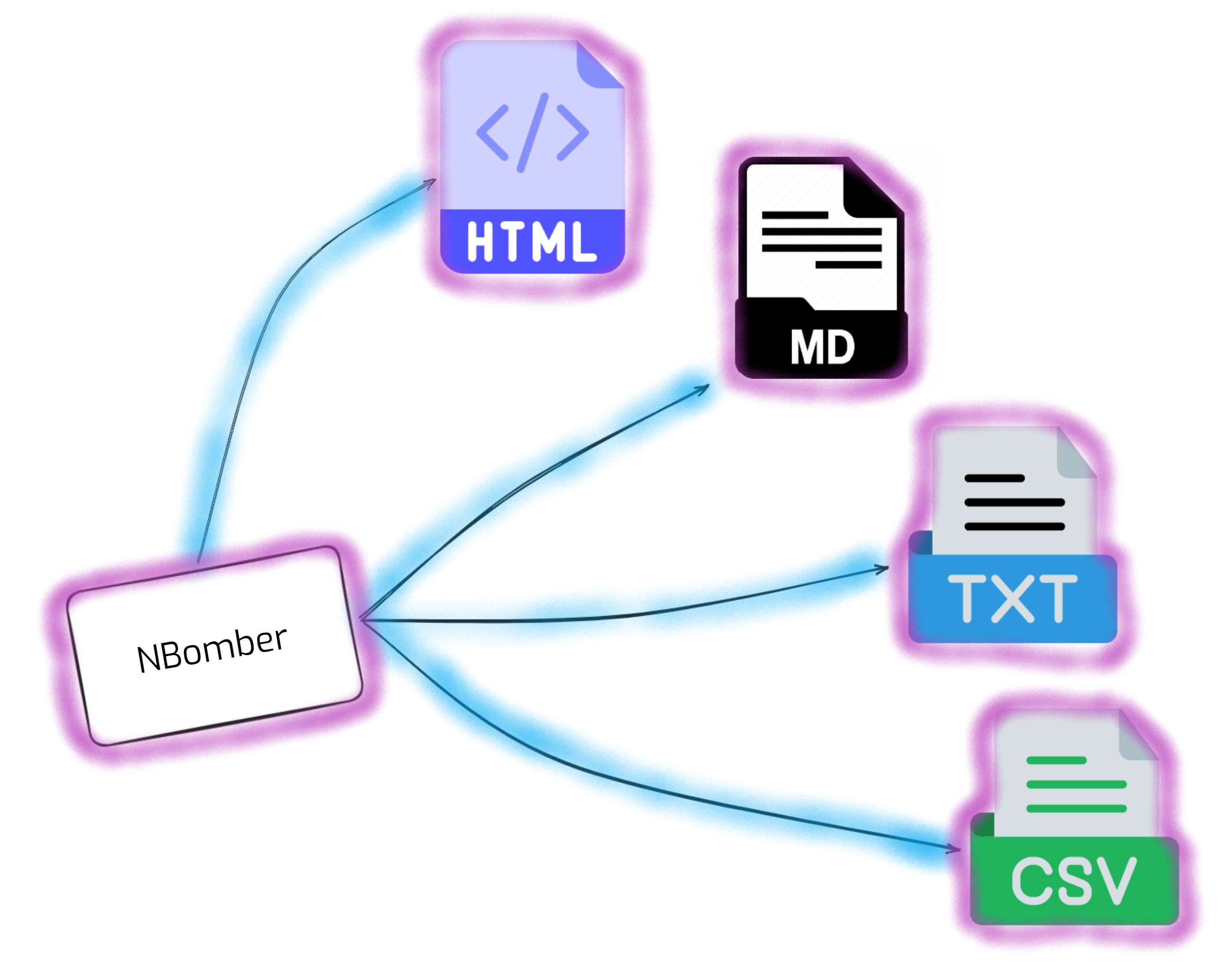

Reports generated by NBomber show how your application performs under load. They provide detailed performance metrics that help you identify bottlenecks, optimize performance, and ensure a smooth user experience. NBomber can generate reports in several formats: HTML, CSV, Markdown (MD), and plain text (TXT).

How to read reports data

Let's consider the following report result data.

| step | stats |
|---|---|
| name | my step |
| request count | all = 18030, ok = 18030, fail = 0, RPS = 100 |
| latency (ms) | min = 33, mean = 133, max = 1728, StdDev = 104 |
| latency percentile (ms) | p50 = 109, p75 = 139, p95 = 380, p99 = 563 |
| data transfer (KB) | min = 49, mean = 49, max = 49, all = 879 MB |

Latency metrics

Latency metrics provide insight into the responsiveness of your application under load. All latency numbers are shown in milliseconds. The following latency metrics appear in NBomber reports:

- min (ms) - The minimum time taken to get a response from the application. Indicates the best-case response time. It’s useful for understanding the fastest possible performance under ideal conditions.

- mean (ms) - The average time taken for a response across all requests. Provides an overall view of the typical response time. It helps gauge the general performance but can be affected by outliers.

- max (ms) - The maximum time taken to get a response. Shows the worst-case response time. This metric is important for understanding the upper bounds of response time under load, which can impact user experience during peak usage.

- StdDev - The standard deviation of the response times, a measure of their variation or dispersion. Indicates the consistency of response times. A low standard deviation means the response times are close to the mean, suggesting stable performance. A high standard deviation points to significant variability, which could impact user experience unpredictably.
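To make these definitions concrete, here is a minimal sketch that computes the same four values from a handful of latency samples. The sample values are hypothetical, and the population form of the standard deviation is an assumption; this page does not state which formula NBomber itself uses.

```csharp
using System;
using System.Linq;

// Hypothetical latency samples in milliseconds (not taken from a real run).
// Six requests are fast; one slow outlier is included on purpose.
double[] latenciesMs = { 35, 90, 110, 120, 140, 150, 1700 };

double min = latenciesMs.Min();
double max = latenciesMs.Max();
double mean = latenciesMs.Average();

// Population standard deviation: square root of the mean squared deviation.
// Assumption: NBomber may use a different (e.g. sample) formula.
double stdDev = Math.Sqrt(latenciesMs.Select(x => (x - mean) * (x - mean)).Average());

Console.WriteLine($"min = {min}, mean = {mean:F0}, max = {max}, StdDev = {stdDev:F0}");
```

With these samples the single 1700 ms request pulls the mean up to 335 ms, even though six of the seven requests finished in 150 ms or less. This is the outlier effect mentioned in the description of the mean.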

Latency percentiles

A classic problem with metrics is that outliers in the system skew the results. A few bad cases can distort the mean and max values. Percentiles are less sensitive to such noise, so use them to get a more rounded perspective and to understand the distribution of response times. High p99 values might indicate occasional latency spikes that could affect user experience.

- p50 (ms) - The response time within which 50% of the requests completed. This is the median response time, offering a clear picture of the typical performance experienced by half of the users.

- p75 (ms) - The response time within which 75% of the requests completed. Useful for understanding the performance experienced by the majority of users, particularly in scenarios where consistent performance is crucial.

- p95 (ms) - The response time within which 95% of the requests completed. Highlights the upper range of response times that most users will experience. It's essential for identifying performance issues that affect a small but significant portion of users.

- p99 (ms) - The response time within which 99% of the requests completed; only the slowest 1% of requests took longer. Focuses on the tail end of the response time distribution. This metric is critical for understanding outliers and ensuring performance consistency.
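The following sketch illustrates why percentiles are less sensitive to outliers than the mean. It uses the nearest-rank method, which is one common definition of a percentile; NBomber's internal calculation may differ. The `Percentile` helper and the sample data are hypothetical.

```csharp
using System;
using System.Linq;

// Nearest-rank percentile: the smallest sample such that at least p percent
// of all samples are less than or equal to it. Expects ascending input.
static double Percentile(double[] sortedSamples, double p)
{
    int rank = (int)Math.Ceiling(p * sortedSamples.Length / 100.0);
    return sortedSamples[Math.Max(rank, 1) - 1];
}

// Hypothetical samples: 98 fast requests (100 ms) and 2 slow outliers.
double[] latenciesMs = Enumerable.Repeat(100.0, 98)
    .Concat(new[] { 2000.0, 5000.0 })
    .OrderBy(x => x)
    .ToArray();

Console.WriteLine($"mean = {latenciesMs.Average()}");
Console.WriteLine($"p50  = {Percentile(latenciesMs, 50)}");
Console.WriteLine($"p95  = {Percentile(latenciesMs, 95)}");
Console.WriteLine($"p99  = {Percentile(latenciesMs, 99)}");
```

Here the two outliers raise the mean to 168 ms, while p50 and p95 stay at 100 ms. Only p99 (2000 ms) reveals the slow tail, which is why it is worth watching alongside the median.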

Throughput metrics

Throughput metrics show how many requests your application can handle within a given time frame and how many of those requests succeed. Consistently high RPS with a low error rate indicates good performance under load. A high error rate requires investigation into the causes of failures.

- RPS (requests per second) - The number of requests processed by the application per second. A high RPS indicates that the application can efficiently handle a large number of requests, while a low RPS might suggest potential performance bottlenecks or limitations in handling concurrent users.

- all - The total number of requests sent during the test.

- ok - The number of requests that were successfully processed and returned a valid response.

- fail - The number of requests that failed to process successfully.
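These counters relate to each other in a simple way. The sketch below takes the numbers from the sample table earlier on this page and derives the error rate and an approximate test duration. It assumes the reported RPS is an average over the whole run, which this page does not state explicitly, and the variable names are illustrative only.

```csharp
using System;

// Counters copied from the sample report table.
int all = 18030, ok = 18030, fail = 0;
double rps = 100;

// Share of failed requests, in percent.
double errorRatePercent = 100.0 * fail / all;

// Assumption: RPS is averaged over the whole run, so duration ~ all / RPS.
// The reported RPS may be rounded, so treat the result as an estimate.
double approxDurationSec = all / rps;

Console.WriteLine($"ok + fail = {ok + fail} (should equal all = {all})");
Console.WriteLine($"error rate = {errorRatePercent}%");
Console.WriteLine($"approx. duration = {approxDurationSec} s");
```

For the sample data this gives an error rate of 0% and a run of roughly 180 seconds.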

Data transfer metrics

These metrics provide insights into the efficiency of data handling and the impact of data volume on application performance. Data size heavily impacts the performance of the system: a shift from 1 KB to 500 KB can significantly increase response latency.

- all (MB) - The total number of MB transferred during the test.

- min, mean, max (KB) - The minimum, mean, and maximum amount of data transferred per request.

A high-performance system has two key goals. The first is a high rate of requests per second (RPS), which indicates the ability to handle a large volume of concurrent requests. The second is low response latency, ideally less than 1 second, so that users experience minimal wait times. Typically, as the number of client requests increases, RPS grows up to a certain point, but this is often accompanied by an increase in latency, meaning users have to wait longer for responses.

How NOT to Measure Latency

For a deeper look at common pitfalls in latency measurement, see the talk "How NOT to Measure Latency" by Gil Tene.

Reports API

The Reports API in NBomber is designed to facilitate the generation and retrieval of detailed test reports. It provides the ability to get different report formats, modify the report, and upload it to a desired destination.

WithoutReports

This method should be used to run NBomber without generating any reports.

    NBomberRunner
        .RegisterScenarios(scenario)
        .WithoutReports()
        .Run();

WithReportFormats

This method should be used to specify which report formats should be generated.

    NBomberRunner
        .RegisterScenarios(scenario)
        .WithReportFormats(
            ReportFormat.Csv, ReportFormat.Html,
            ReportFormat.Md, ReportFormat.Txt
        )
        .Run();

WithReportFolder

This method should be used to specify the output folder for the session's reports. The default value is "reports".

    NBomberRunner
        .RegisterScenarios(scenario)
        .WithReportFolder("my-reports")
        .Run();

WithReportFileName

This method should be used to specify the report file name.

    NBomberRunner
        .RegisterScenarios(scenario)
        .WithReportFileName("mongo-db-benchmark")
        .Run();

Upload report to custom destination

There could be cases when we need to upload report results to remote storage (e.g., FTP, S3). NBomber provides access to the file content, allowing you to retrieve and upload it to the desired destination.

    var result = NBomberRunner
        .RegisterScenarios(scenario)
        .Run();

    var htmlReport = result.ReportFiles.First(x => x.ReportFormat == ReportFormat.Html);
    var filePath = htmlReport.FilePath;    // file path
    var html = htmlReport.ReportContent;   // string HTML content

    // { code to upload to desired destination }
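As one possible shape for the upload step, the sketch below copies a report file into a destination directory, such as a mounted network share. `PublishReport` is a hypothetical helper, not part of NBomber, and it uses only the standard `System.IO` API. The demo creates a stand-in file so that it runs on its own; in a real test you would pass the `FilePath` of the report file instead. For FTP or S3, the file copy would be replaced by a call to the corresponding client library.

```csharp
using System;
using System.IO;

// Hypothetical helper: copies a generated report file into a destination
// directory and returns the path of the copy.
static string PublishReport(string reportFilePath, string destinationDir)
{
    Directory.CreateDirectory(destinationDir); // no-op if it already exists
    var target = Path.Combine(destinationDir, Path.GetFileName(reportFilePath));
    File.Copy(reportFilePath, target, overwrite: true);
    return target;
}

// Stand-in for a generated report; in a real run use htmlReport.FilePath.
var workDir = Path.Combine(Path.GetTempPath(), "nbomber-report-demo");
Directory.CreateDirectory(workDir);
var sourcePath = Path.Combine(workDir, "report.html");
File.WriteAllText(sourcePath, "<html><body>demo report</body></html>");

var published = PublishReport(sourcePath, Path.Combine(workDir, "published"));
Console.WriteLine($"Report copied to {published}");
```

Copying by file path avoids holding the whole report in memory. If the destination API expects content rather than a path, the `ReportContent` string can be sent instead.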

Reports modification

There could be cases when you need to filter out some of the metrics data from your report. One example can be a "pause step" or "hidden step" that you can create and use but don't want to see in final reports.

WithReportFinalizer

This method allows the adjustment of NodeStats data before generating the reports. You can use it to filter out unnecessary metrics.

Example 1:

    NBomberRunner
        .RegisterScenarios(scenario)
        .WithReportFinalizer(data =>
        {
            var scnStats = data.ScenarioStats
                .Where(x => x.ScenarioName != "hidden")
                .ToArray();
            return ReportData.Create(scnStats);
        })
        .Run();

Example 2:

    NBomberRunner
        .RegisterScenarios(scenario)
        .WithReportFinalizer(data =>
        {
            var stepsStats = data.GetScenarioStats("mongo-read-write")
                .StepStats
                .Where(x => x.StepName != "pause")
                .ToArray();
            data.GetScenarioStats("mongo-read-write").StepStats = stepsStats;
            return data;
        })
        .Run();